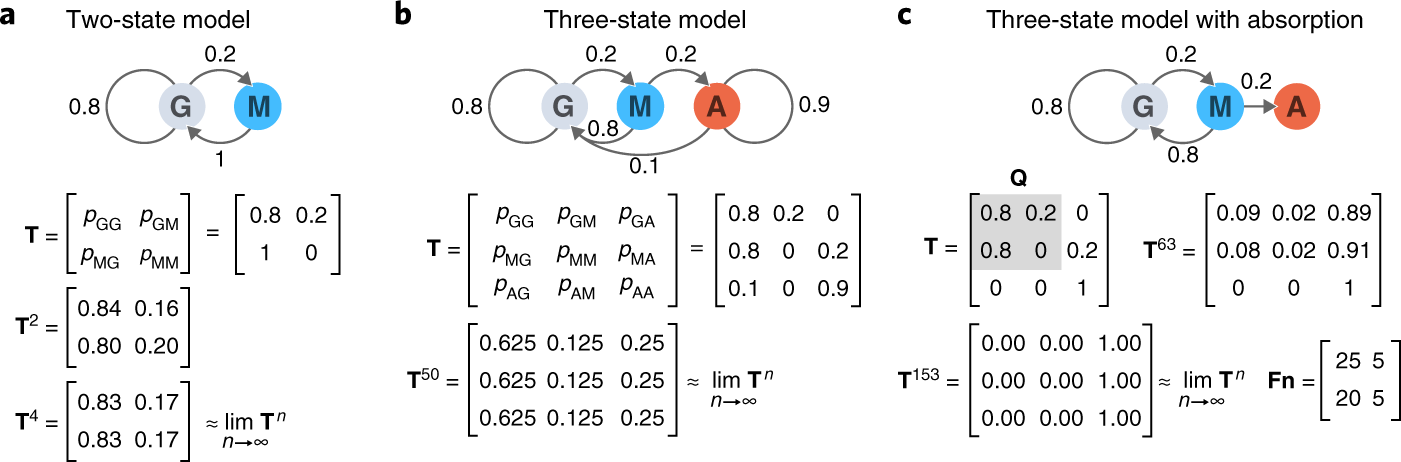

SOLVED: Consider a Markov chain with state space defined in the following graph: 3 1 2 At each node, the chain chooses a state that is (directly) linked with the current state at

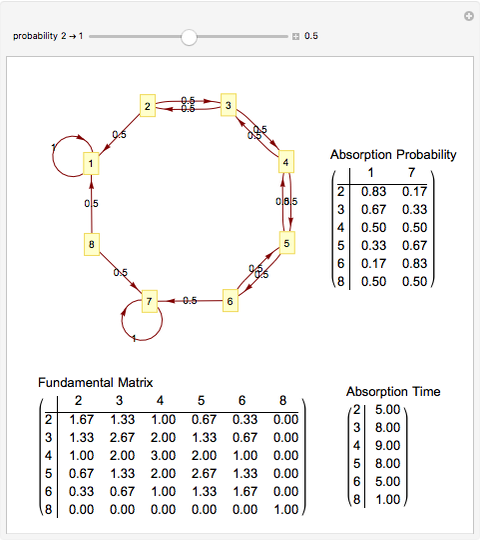

random variable - How can I compute expected return time of a state in a Markov Chain? - Cross Validated

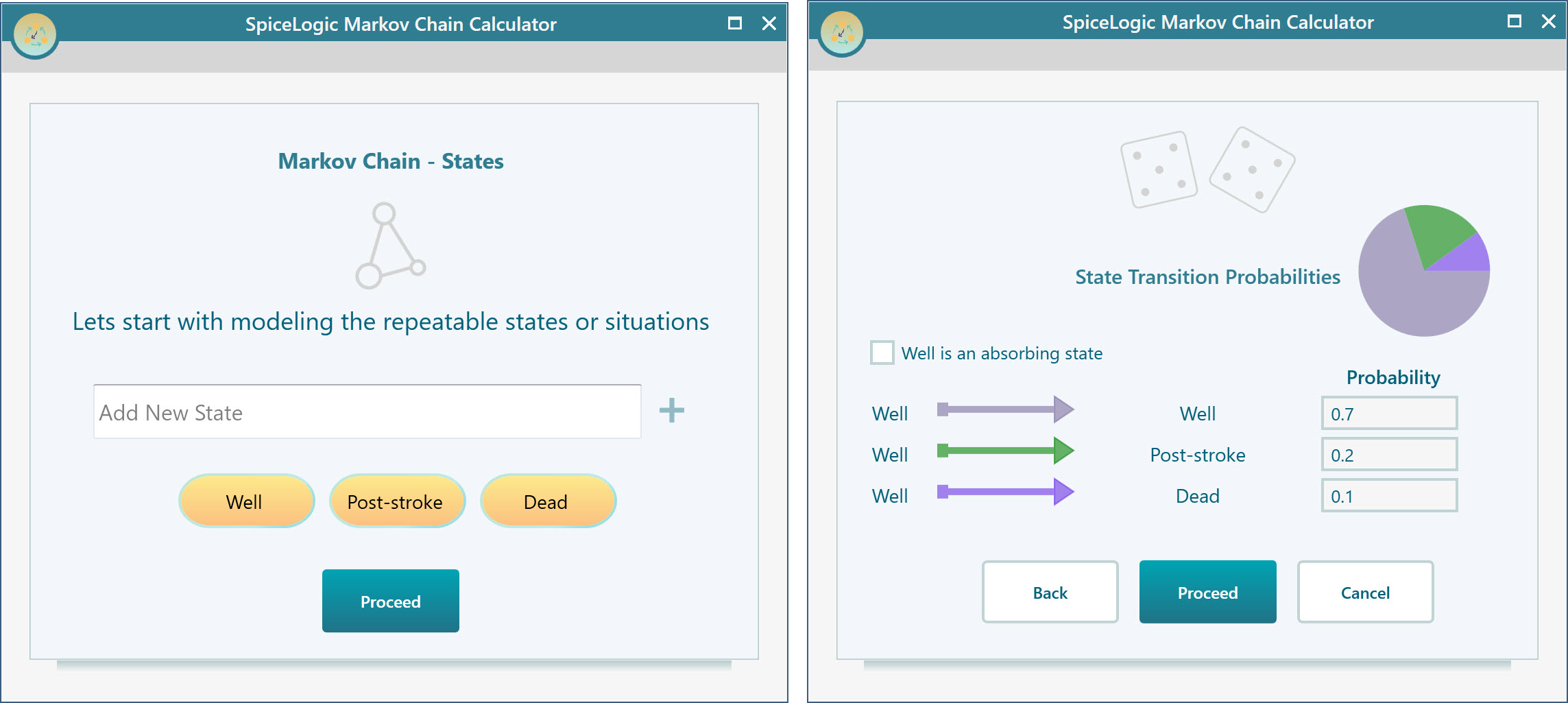

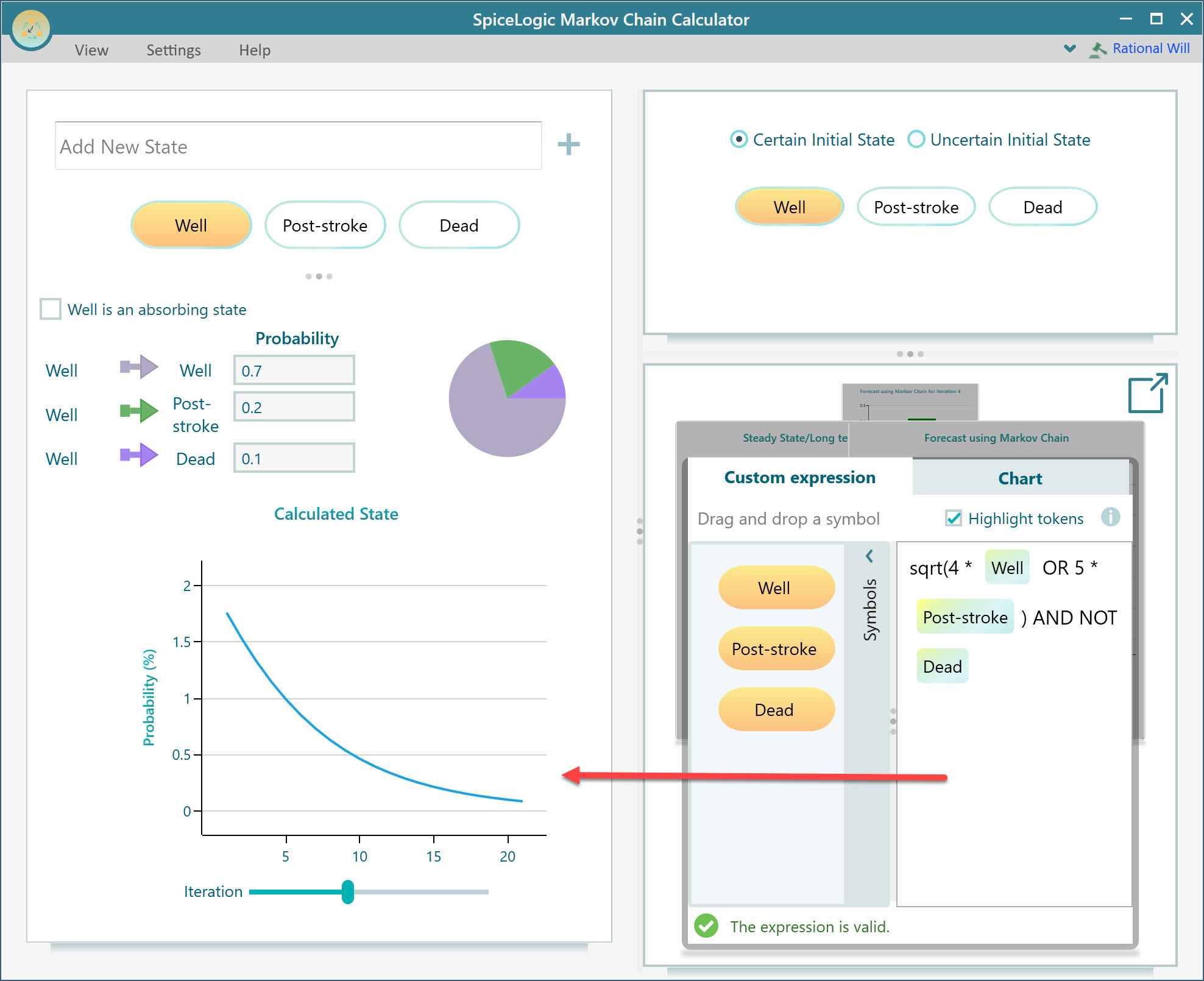

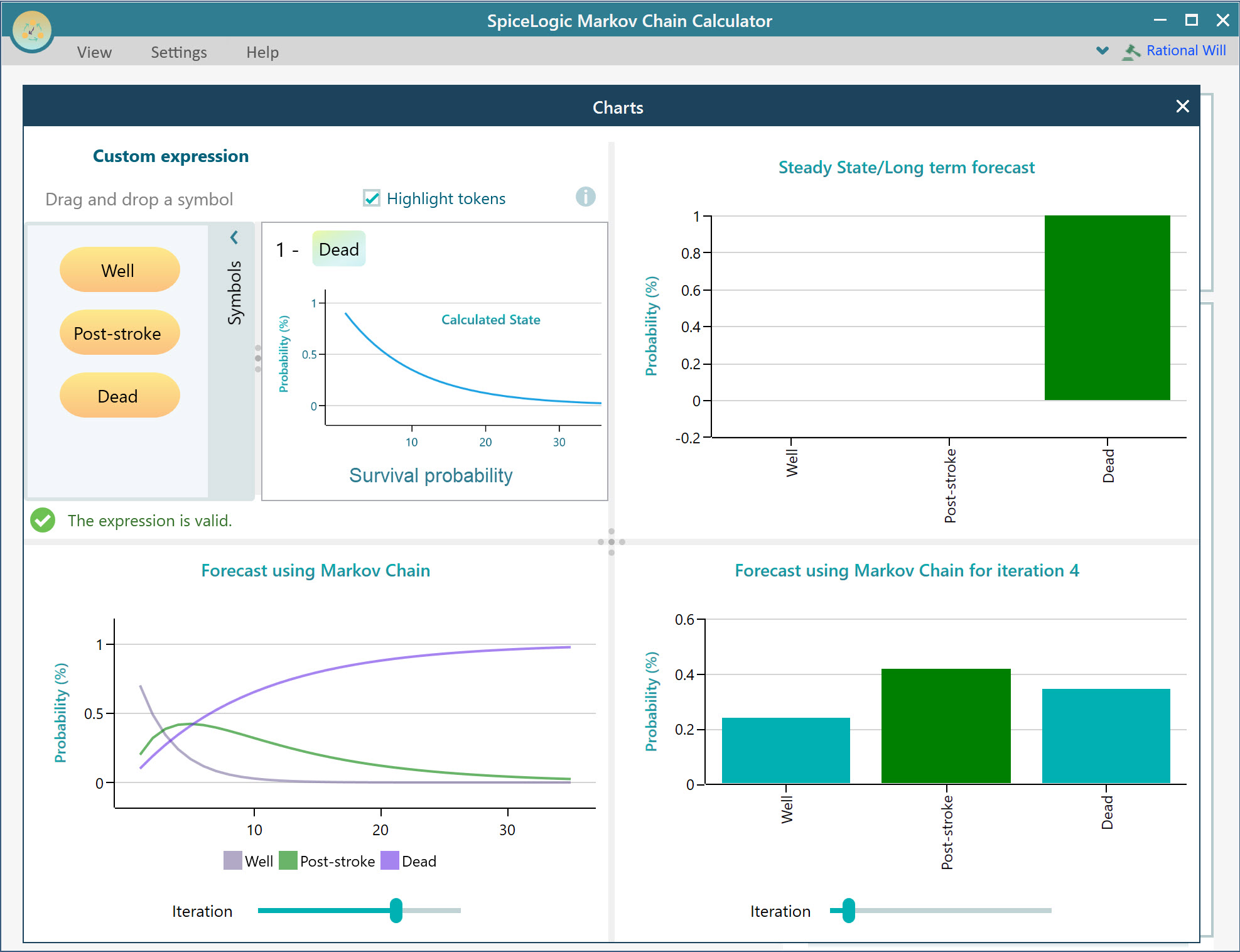

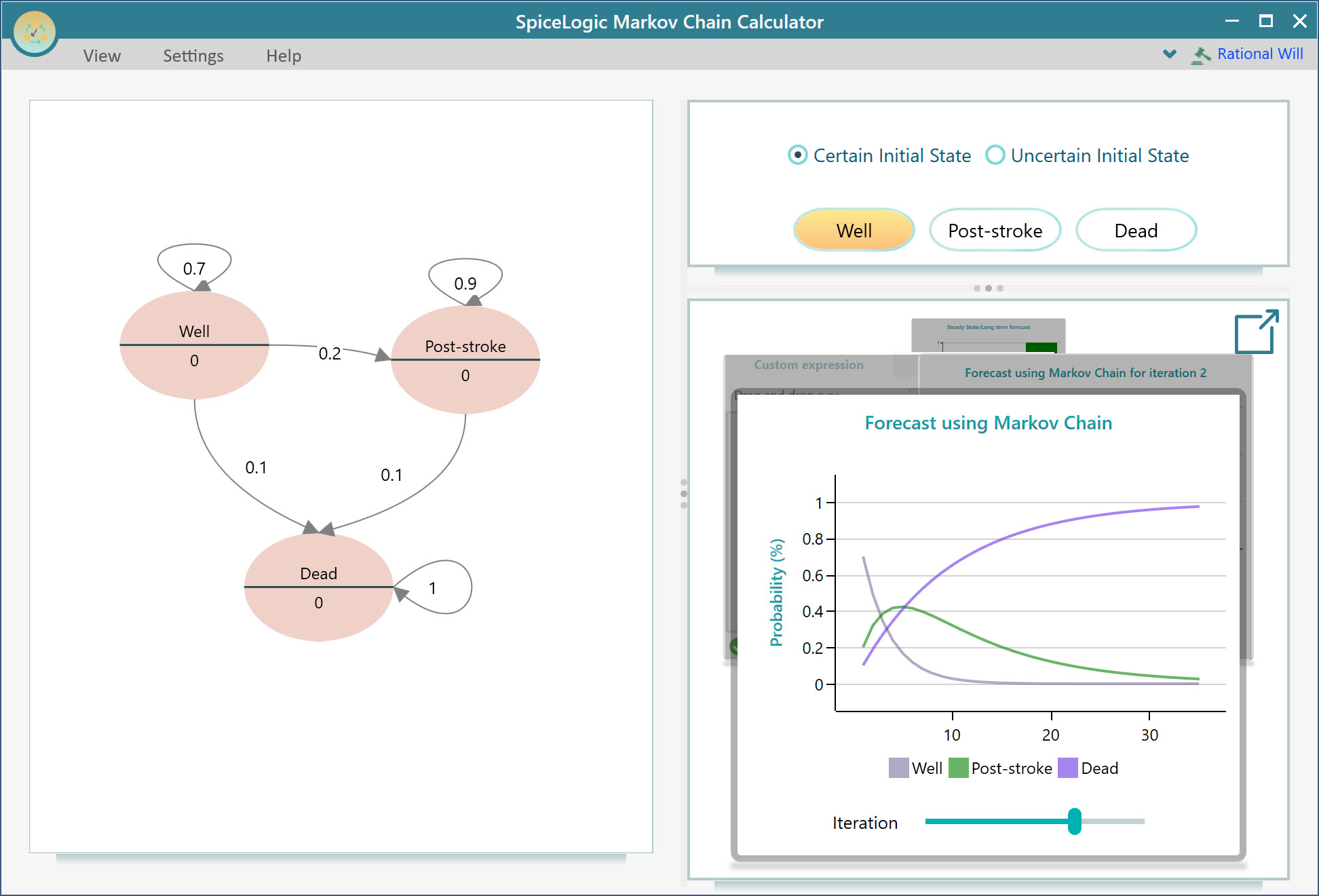

Markov Chain Calculator - Model and calculate Markov Chain easily using the Wizard-based software. - YouTube

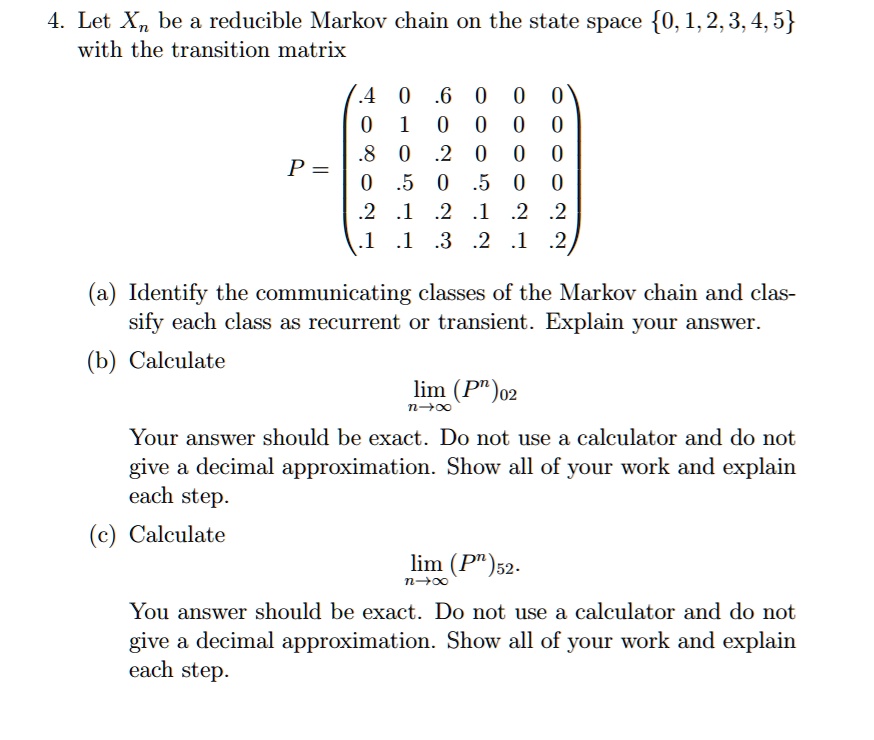

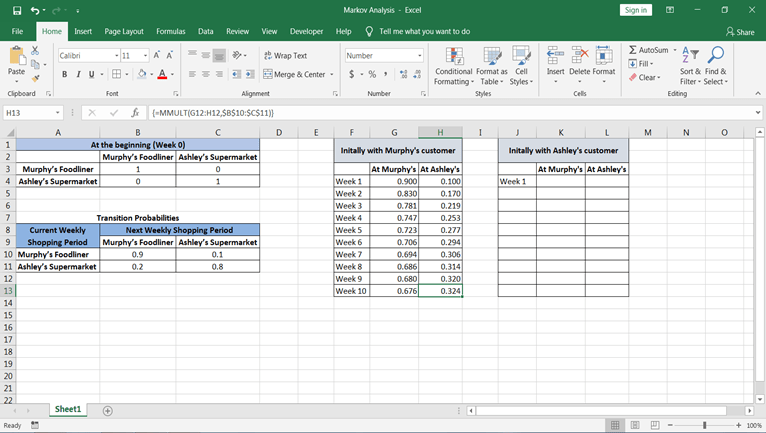

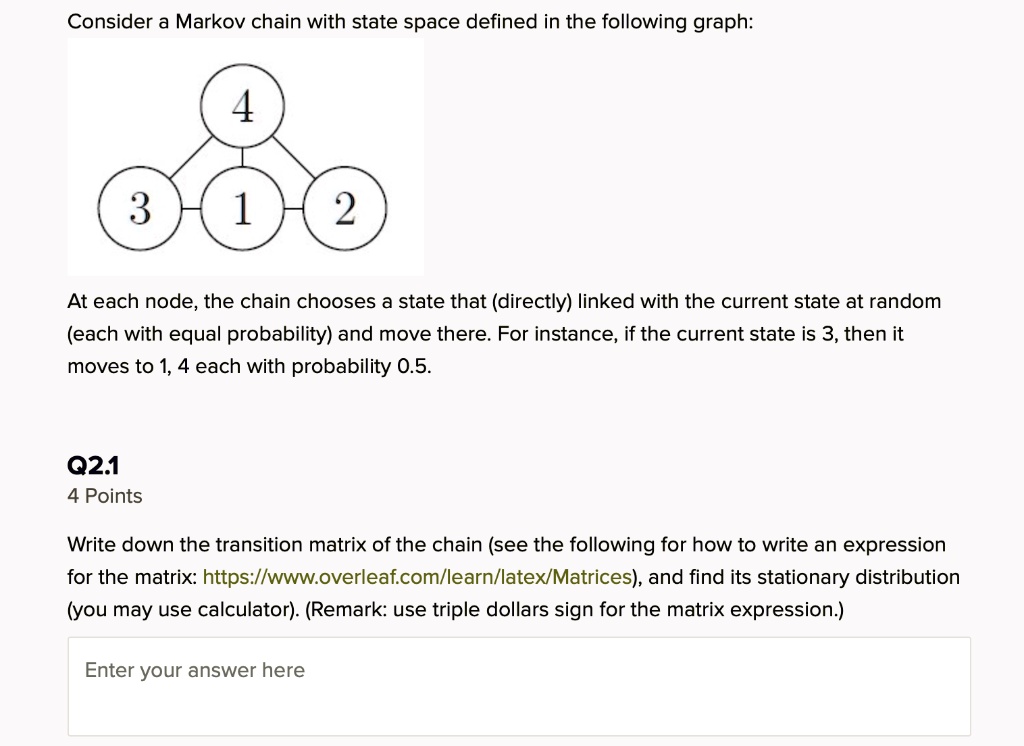

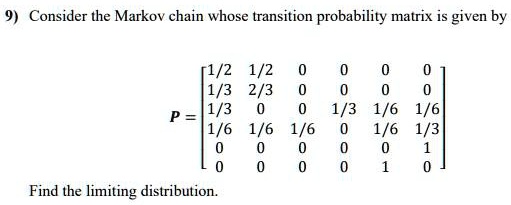

SOLVED: Consider the Markov chain whose transition probability matrix is given by 2/3 E 1/6 1/6 1/6 1/6 1/6 1/3 Find the limiting distribution

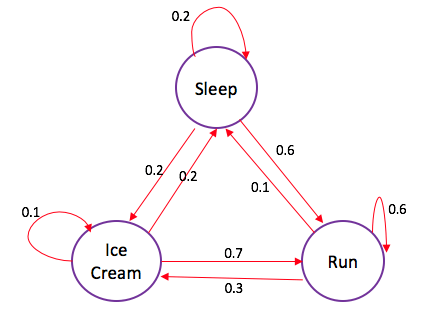

markov process - Example on how to calculate a probability of a sequence of observations in HMM - Cross Validated